iPhones will soon be able to detect child abuse images


Apple has announced new features for its operating systems to step up the fight against child sexual abuse images, just hours after the Financial Times reported the plan. Updated versions of iOS, iPadOS, macOS, and watchOS with tools to combat the spread of such content are expected to roll out later this year.

TL;DR

- The Messages app will warn about sexually explicit images.

- Known child abuse images will be identified in iCloud Photos.

- Siri and Search will offer extra guidance and resources against child abuse.

The Financial Times broke the story on Thursday afternoon (August 5), and shortly afterwards Apple confirmed the new child-safety system with an official statement and a technical document (PDF) explaining how it works.

Starting with iOS 15, iPadOS 15, watchOS 8, and macOS Monterey, and initially in the US only, devices running these updates will gain features to prevent and warn against the spread of child abuse content.

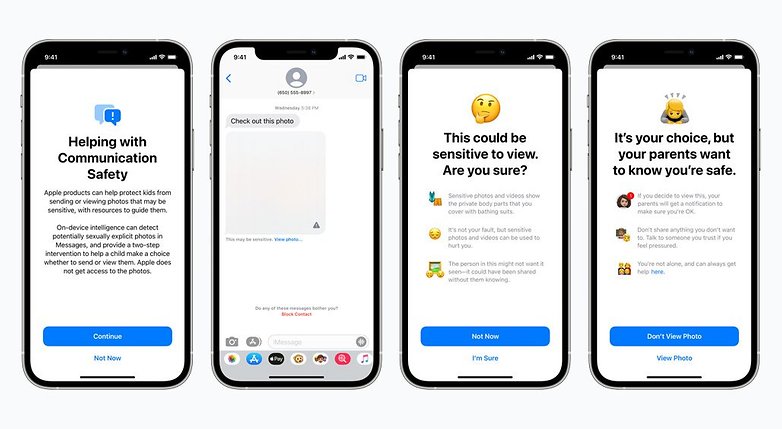

Alerts for parents and guardians in Messages

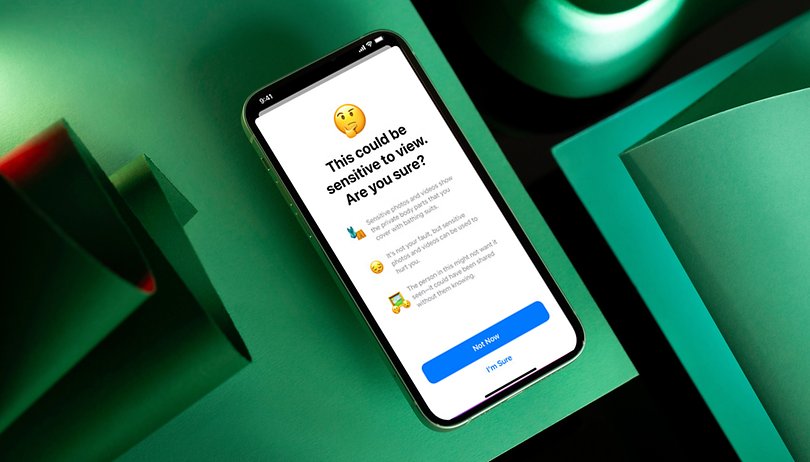

The Messages app will be able to detect sexually explicit images being sent or received. Received images will be blurred and can only be viewed after the user acknowledges a warning that the content may be sensitive (as shown in the third screen above).

Parents or guardians can also choose to be notified if the child views content that Messages has flagged as explicit. According to Apple, the analysis is performed on the device itself, so the company does not gain access to the content.

This new feature will be integrated into the existing family account options in iOS 15, iPadOS 15, and macOS Monterey.

Detection in iCloud Photos

The feature likely to attract the most attention is Apple's new ability to detect child sexual abuse images stored in iCloud Photos. The tool identifies images that have already been catalogued by NCMEC (the National Center for Missing and Exploited Children, a US nonprofit).

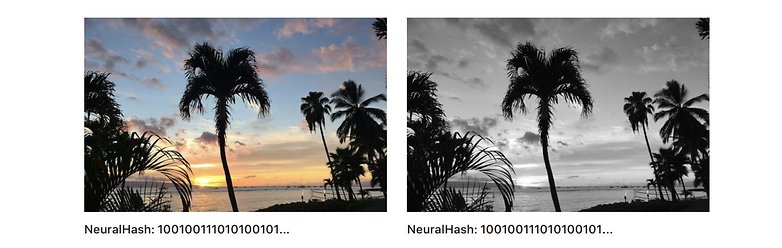

Although it targets files stored in the cloud, the matching is done on the device itself, a point Apple has repeatedly emphasized, by comparing photos against hashes (digital fingerprints) of known images supplied by NCMEC and other organizations.

According to Apple, the hash stays the same if the image is resized, converted to black and white, or saved at a different compression level. The company cannot interpret the results of the analysis unless an account exceeds a certain number of matches (a threshold that has not been disclosed).
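Apple's own hashing technology (which it calls NeuralHash) is not reproduced here. As a rough illustration of why a perceptual hash can survive resizing or color removal while an ordinary file checksum would not, the sketch below implements a generic "difference hash" (dHash): shrink the image to a tiny grayscale grid and record, for each pair of neighboring cells, whether the left one is brighter. Everything in it is an assumption for illustration: the function names (`dhash`, `count_matches`, `reaches_threshold`), the 9x8 grid, the 4-bit Hamming tolerance, and the threshold values are hypothetical, and this is not how Apple matches or counts images.

```python
from typing import Iterable, List, Sequence, Set, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)


def to_gray(img: Sequence[Sequence[Pixel]]) -> List[List[int]]:
    """Integer luma (BT.601 weights). Dropping color means a grayscale copy hashes the same."""
    return [[(299 * r + 587 * g + 114 * b) // 1000 for (r, g, b) in row] for row in img]


def shrink(gray: List[List[int]], out_w: int, out_h: int) -> List[List[float]]:
    """Box-average down to out_w x out_h cells, so the original resolution no longer matters."""
    h, w = len(gray), len(gray[0])
    cells = []
    for cy in range(out_h):
        y0 = cy * h // out_h
        y1 = max((cy + 1) * h // out_h, y0 + 1)
        row = []
        for cx in range(out_w):
            x0 = cx * w // out_w
            x1 = max((cx + 1) * w // out_w, x0 + 1)
            block = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        cells.append(row)
    return cells


def dhash(img: Sequence[Sequence[Pixel]], size: int = 8) -> int:
    """64-bit difference hash: one bit per horizontally adjacent cell pair (is left brighter?)."""
    bits = 0
    for row in shrink(to_gray(img), size + 1, size):
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | (1 if left > right else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def count_matches(photo_hashes: Iterable[int], known: Set[int], max_distance: int = 4) -> int:
    """Hypothetical rule: a photo matches if it is within max_distance bits of any known hash."""
    return sum(1 for h in photo_hashes if any(hamming(h, k) <= max_distance for k in known))


def reaches_threshold(photo_hashes: Iterable[int], known: Set[int], threshold: int,
                      max_distance: int = 4) -> bool:
    """Hypothetical account-level rule: act only once the match count reaches the threshold."""
    return count_matches(photo_hashes, known, max_distance) >= threshold


if __name__ == "__main__":
    # Synthetic 18x16 test image; channel values stay below 200 so brightening cannot clip.
    img = [[((x * 37 + y * 91) % 200, (x * 53 + y * 17) % 200, (x * 11 + y * 29) % 200)
            for x in range(18)] for y in range(16)]
    bigger = [[p for p in row for _ in range(2)] for row in img for _ in range(2)]
    gray_copy = [[(v, v, v) for v in row] for row in to_gray(img)]
    brighter = [[(r + 40, g + 40, b + 40) for (r, g, b) in row] for row in img]
    for name, variant in [("2x larger", bigger), ("grayscale", gray_copy), ("brighter", brighter)]:
        print(name, hamming(dhash(img), dhash(variant)))  # prints 0 for each variant
```

Real systems use far more robust hashes than this toy; the point is only that the hash is computed from coarse image structure rather than from the file's bytes, and that an account-level count of matches, rather than any single match, is what triggers further action.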

Apple also claims the system has less than a one in one trillion chance per year of incorrectly flagging a given account. When an account is flagged, Apple manually reviews the matched images; if the match is confirmed, it disables the account and sends a report to NCMEC. The account owner can appeal the decision.
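The announcement does not give the per-image false-match rate or the match threshold behind the one-in-a-trillion figure, so the numbers below are purely hypothetical. This sketch only shows why requiring several matches before flagging an account drives the error rate down so quickly. Assuming (as a simplification) that each of an account's n photos false-matches independently with probability p, the chance of t or more false matches is the binomial tail P(X >= t) = sum over k from t to n of C(n, k) p^k (1 - p)^(n - k). The function name `prob_at_least` and the inputs in the demo are illustrative assumptions.

```python
import math


def prob_at_least(n: int, p: float, t: int) -> float:
    """P(X >= t) for X ~ Binomial(n, p): sum_{k=t}^{n} C(n,k) p^k (1-p)^(n-k).

    Terms are computed in log space with lgamma so large n does not overflow.
    Assumes 0 < p < 1 and independent false matches (a simplifying assumption).
    """
    if t <= 0:
        return 1.0
    if t > n:
        return 0.0
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for k in range(t, n + 1):
        log_term = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * log_p + (n - k) * log_q)
        total += math.exp(log_term)  # very negative log_term simply contributes 0.0
    return total


if __name__ == "__main__":
    # Hypothetical inputs: 10,000 photos per year, one-in-a-million false-match rate per photo.
    n, p = 10_000, 1e-6
    for t in (1, 2, 3, 5, 10):
        print(f"threshold {t:>2}: {prob_at_least(n, p, t):.3e}")
```

With these made-up inputs, each increase in the threshold shrinks the probability by orders of magnitude. That is the general reason a multi-match threshold can produce a very small per-account error rate, although Apple's actual parameters and model are not described in its announcement.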

Even before the official announcement, encryption experts warned that the feature could open the door to similar algorithms being used for other purposes, such as surveillance by authoritarian governments, and to ways of circumventing end-to-end encryption.

For now, Apple has not said when (or even if) the system will be available in other regions. Questions also remain, such as whether it is compatible with current laws in other countries.

Siri also plays a role

Rounding off the new features, Siri and Search across Apple's operating systems will provide information about online safety, including links for reporting child abuse.

Like the other features, it will initially be offered only in the United States, with no timeframe for when, or whether, it will reach other regions.

Note that many countries have a dedicated toll-free number for anonymous reports of abuse and neglect of children and adolescents, often available 24 hours a day, 7 days a week. Reports can usually also be made to the country's Ministry of Women, Family and Human Rights or its equivalent.

Source: Apple
